Microsoft's AI Head Fake and Making Google Dance

Welcome to Tidal Wave, an investment and research newsletter about software, internet, and media businesses.

Microsoft’s AI Head Fake

The discussion around Microsoft’s recent endeavors in AI and investment in OpenAI has largely been focused on their self-declared war on Google Search. It’s an engrossing narrative. A sleepy incumbent (Google) is being outflanked by one of its arch-rivals. And you have Satya dropping fire quotes like this in interviews:

“I hope with our innovation they will definitely want to come out and show that they can dance. I want people to know that we made them dance.” — Satya Nadella, Microsoft CEO

Here’s the thing. If Search were really what Microsoft was going after, the last thing a prudent CEO would do is go on every news outlet and scream, “We’re going after them. And just as a heads-up, we’re going to put in an aggressive bid to become the default search engine in iOS.”

Microsoft’s seemingly hell-bent focus on taking share from Google Search will likely turn out to be nothing more than a head fake.

For one thing, Microsoft knows its problem in search is rooted in distribution, not product quality or features. And Microsoft, of all companies, knows that competing with an incumbent that holds a massive distribution advantage is unlikely to bear fruit.

Holding the default position in search matters, and Microsoft is at a severe disadvantage there. Google dominates the desktop and mobile markets with its default position.

Adding a Q&A feature that addresses a small percentage of queries is unlikely to meaningfully move the needle in terms of getting Microsoft the distribution it needs.

What would make a genuine push into search even more puzzling is that search advertising is no longer an attractive end market for Microsoft. The company has other, more important growth vectors (Azure, GitHub, LinkedIn, Office 365), and it is far better positioned to win in all of them than in Search.

Focus and Making Google Dance

Don’t get me wrong, Nadella is earnest when he says he wants to make Google dance. But making “them dance” is primarily a mechanism to capture the attention of the two constituencies that have underpinned Microsoft's strategy for the last few decades: developers and businesses.

And if, along the way, that means creating chaos at Google, that’s the cherry on top. Who doesn’t like it when their old boss returns and starts shipping code?

Microsoft's main priorities are really elsewhere. Over the next 10 years, Microsoft’s revenue growth and equity story will predominantly be focused on just two things:

Growing and taking share with Azure. As it relates to AI, that means ensuring Microsoft is the leading platform for developers to build, train, and deploy their AI models and applications.

Expanding within enterprise and business customers. As it relates to AI, that means up-leveling its suite of applications with new features (e.g., generative AI, meeting-note summaries).

Over the next decade, ~90% of Microsoft's revenue growth will come from these two areas. Nothing else really moves the needle for the company. Microsoft knows this; coincidentally, 80-90% of Nadella’s performance stock awards in FY ‘22 were tied to those two things.

Bing could grow its revenue 2-3x, and that would only moderately move the needle for Microsoft, especially once you take into account the revenue share the company would need to cede to become the default search engine on Apple et al. [1][2]
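For readers who want to play with the "move the needle" arithmetic, here is a rough sketch. The function name, its parameters, and every input below are hypothetical placeholders chosen only to show the shape of the calculation; they are not Microsoft's or Bing's reported figures, so plug in your own estimates.

```python
def incremental_share(total_revenue, segment_revenue, multiple, rev_share_ceded):
    """Net new segment revenue as a fraction of total company revenue.

    total_revenue:    company-wide revenue (any currency unit)
    segment_revenue:  current revenue of the segment (same unit)
    multiple:         how many times larger the segment becomes (2.0 = doubles)
    rev_share_ceded:  fraction of the NEW revenue paid away to distribution partners
    """
    gross_new = segment_revenue * (multiple - 1)   # added revenue before payouts
    net_new = gross_new * (1 - rev_share_ceded)    # what the company actually keeps
    return net_new / total_revenue


# Hypothetical placeholder inputs, NOT reported financials.
share = incremental_share(
    total_revenue=100.0,
    segment_revenue=5.0,
    multiple=2.0,
    rev_share_ceded=0.4,
)
print(f"Net uplift as a share of total revenue: {share:.1%}")
```

The point of the sketch is the structure: a small segment multiplied by a large growth factor, then reduced by the revenue share paid for distribution, still lands as a small fraction of a much larger total.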

All this is to say that the marketing blitz that Microsoft orchestrated over the last few months was to:

Co-opt the leading AI company’s brand (OpenAI)

Position Azure as the leading AI and ML platform for developers

Highlight (coming) AI capabilities of Microsoft’s applications to their business customers

If Google should be worried about anything, it's really that Microsoft is outflanking them with regard to developer mindshare [3] and the next-generation application suite.

Microsoft and Developers

Developers have always been core to Microsoft’s strategy, especially around platform shifts. During the PC era, that meant convincing developers to build applications for the Windows OS. And during the browser wars, Gates was intensely focused [4] on ensuring developers built to the Internet Explorer standard.

From Microsoft’s 1997 Planning Memo

Windows won the desktop OS battle because it had more applications than any other platform. We must make sure that the best Web applications and content become available for IE users first…

There are 6 million developers worldwide who use Microsoft development tools and technology. This is one of our key assets against Netscape. We must help them (ISVs and corporate developers) write to the ActiveX platform, so they develop the rich base of Web applications and controls that establishes the value of the platform.

The strategy fell flat, and the company more or less missed the internet wave. A decade later, it would miss the mobile wave as well. Only after missing both platform shifts was Microsoft able to catch the cloud computing wave.

For all intents and purposes, we're undergoing another platform [5] shift driven by the maturation of AI and ML technology. For this platform shift, Microsoft's primary focus is to drive AI and compute workloads to Azure with an assist from OpenAI.

And Microsoft’s pitch to developers is simple: Azure will be the best platform to build, train, and fine-tune AI models and applications.

From Nadella's 2022 Ignite Keynote Presentation

We have built the next-generation supercomputers in Azure that are being used by us, OpenAI, as well as customers like Meta, to train some of the largest and most powerful AI models.

Azure provides almost 2x higher compute throughput per GPU and near-linear scaling to thousands of GPUs, thanks to world-class networking and system software optimization. And for inferencing, Azure is more cost-effective than other clouds, delivering up to 2x the performance per dollar.

In Azure, we also offer the best tools across the machine learning lifecycle from data preparation to model management. Data scientists and machine learning engineers can use Azure Machine Learning to build, train, deploy, and operate large-scale AI models at scale — Nadella, Microsoft CEO

Microsoft's primary forms of value capture for this platform shift are Azure and the developer tooling around GitHub and VS Code (e.g., GitHub Copilot).

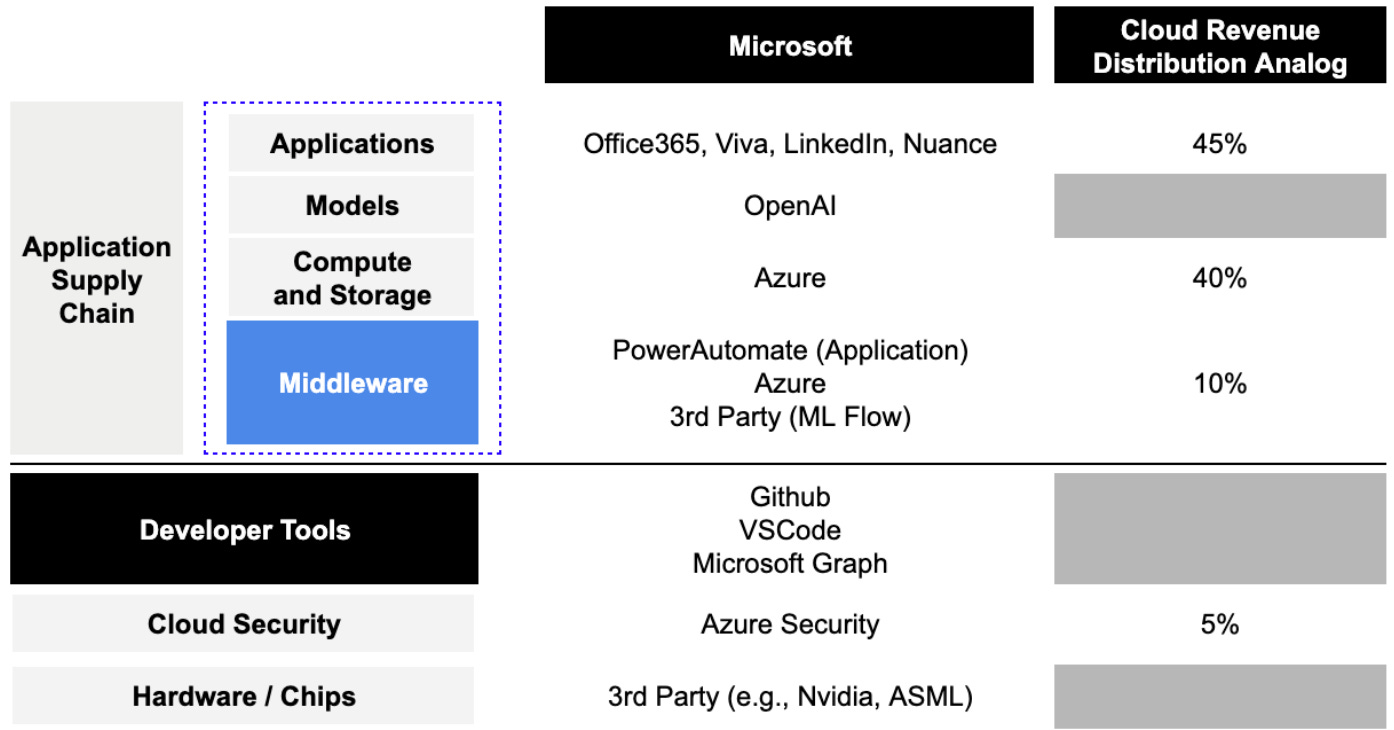

How much value will be captured in this next wave is still to be determined. But if we use the distribution of cloud spend as a proxy, it implies that the infrastructure layer captures at least 40% of the total value, and that the model layer will capture some share of the value that previously accrued to the application layer.

The “supply chain” or stack for AI applications will likely look something like this:

For Microsoft, the goal is quite clear: incentivize and evangelize Azure, OpenAI, GitHub, and VS Code as the standard for developers building AI applications.

Standards in New Technology Markets

In many ways, this strategy is working. Has any company in recent memory received as much earned media as Microsoft has? [6] More importantly, ChatGPT seems to have captured the minds of developers – at least for the time being.

Microsoft is fully aware of the importance of blitzing the developer market early. In new technology markets, companies and developers tend to centralize around “standards” quickly.

From The Gorilla Game by Geoffrey A. Moore

Hypergrowth markets, in order to scale up quickly, will often spontaneously standardize on the products from a single vendor. This simplifies the issue of creating new standards, building compatible systems, and getting a whole new set of product and service providers up to speed quickly on the new solution set…Everyone wants its products because they are setting the new standard. Its competitors by contrast, must fight an uphill battle just to get considered. It makes for a huge competitive advantage.

For companies and developers, it’s a simple resource and time allocation decision. When implementing new technology, there are “economies of learning”: once you have familiarized yourself with one vendor and tech stack, the bar to learn and implement another is very high, and doing so is a poor allocation of time during a period of rapid growth.

Microsoft’s strategy to help turn Azure and OpenAI into a standard is two-fold:

Create a tight integration between Azure and OpenAI that drives superior performance relative to the other vendors.

Offer the lowest price for the core model tokens. After the company’s most recent price cut, OpenAI’s API costs one-tenth as much as its competitors’ [7].

The main drawback of Azure’s current approach is that while OpenAI’s APIs are marketed as “General Availability,” getting access to the API is quite restrictive (by developer standards). Microsoft says that’s primarily because of its responsible AI policies. Open-source-first platforms (e.g., Hugging Face) allied with the other cloud vendors may eventually outflank Azure on this [8].

Applications, Enterprise, and Beyond

The other focus area for Microsoft is using OpenAI's “primitives” to up-level its current application suite, both to expand within its enterprise customers and to appeal to a new generation of businesses.

OpenAI’s primitives (so far) are:

ChatGPT – LLM that can understand and generate natural language.

Codex – the language-to-code model, which powers GitHub Copilot.

DALL-E – the text-to-image generation model.

Whisper – the speech recognition and transcription model.

Microsoft is integrating each of these primitives into the company’s many applications (e.g., Office, Teams, Dynamics 365) with the goal of driving increased adoption of its application suite. In classic Microsoft fashion, the company will likely raise the bundle price in a few years to capture the value of the new features.

From Nadella's 2022 Ignite Keynote Presentation

We envision a world where everyone, no matter their profession, can have an experience like this for everything they do. That includes sales. We are applying conversational intelligence to Viva Sales to transcribe customer calls, and automatically identify highlights and critical follow-ups to ensure better customer service. And Dynamics 365 is using the same capabilities to help sellers identify new opportunities and suggest next steps to close deals

If you missed it, Microsoft used Whisper and ChatGPT to launch a Gong competitor. Is building a Gong competitor really going to move the needle for Microsoft? No.

But it’s a step towards owning the productivity and collaboration stack for businesses: enterprise, SMB, old and new. Historically, Microsoft has dominated the legacy and enterprise IT/productivity market. But while Microsoft was transitioning its suite to the cloud in the mid-2010s, G Suite and a new generation of “core office” applications took hold, especially at the low end of the market. The company's investments in the Microsoft Graph, Office-adjacent tools (e.g., Power Automate), and now AI features may make the product appealing to more types of companies, particularly those where AI features might have the most immediate impact.

Over time, we should expect Microsoft to (1) expand the primitives, (2) continue to integrate the primitives into its application suite, and (3) more aggressively market its application suite.

For companies adjacent to the productivity suite, Microsoft’s intense focus on up-leveling their application stack with OpenAI primitives should be a Code Red.

Always feel free to drop me a line at ardacapital01@gmail.com if there’s anything you’d like to share or have questions about.

[1] Not to mention that Google-owned Chrome is the dominant browser on the desktop.

[2] For reference, Apple's payment from Google for the default search position is larger than Bing’s total revenue.

[3] Though the event was not set up as developer-focused, I was shocked by the absolute lack of emphasis on serving developers in the Bard keynote.

[4] To the point of inviting antitrust scrutiny.

[5] The word “platform” is a bit overused, so Ben Thompson’s characterization of this as an “epoch” is probably more accurate.

[6] Not all of it was good (e.g., Sydney), but early adopters probably do not care.

[7] The competitive advantage here is meaningful because training and building models to OpenAI’s performance level can be capital intensive. By lowering the price one can charge for the models, OpenAI is effectively attempting to pull up the ladder behind it.

[8] This would be quite ironic, since Google has been slow-rolling DeepMind because of its “AI Principles”, which ultimately allowed Microsoft (+ OpenAI) to beat them to market.