Google I/O, Bard, and Implications for Search

Welcome to Tidal Wave, a research newsletter about software, internet, and media businesses. And as always, if you’ve been forwarded this email (you know who you are), you can become a subscriber by clicking the link below.

Google recently held its annual developer conference, Google I/O. During the event, the company announced several new AI features and products. Importantly, Google showcased its ChatGPT competitor, Bard, and how it plans to incorporate generative AI into search.

Throughout his keynote, CEO Sundar Pichai hammered home one key point: Google’s products have always had AI, and generative AI is simply the next iteration. In each feature announcement, Pichai highlighted how AI was already incorporated into Google’s products (e.g., Magic Eraser).

The company was keen to make the point that it has been doing AI & ML for a while; in fact, many of the key technical breakthroughs that enabled LLMs came from Google. This slide should assuage the concerns of some investors who feared that Google would have to ramp up opex to “catch up” to OpenAI.

The party line is that Google is only now realizing those investments, with generative AI being rolled out across all of the company’s apps. Since most of the features were expected and similar to what Microsoft announced for the Office Suite, this post will focus on the announcements related to Bard and Search.

Bard as a Digital Personal Assistant

Bard is Google’s response to ChatGPT (and, to an extent, Bing Chat). Just like ChatGPT, Bard lets users ask questions and prompt it to do tasks in natural language. And unlike in the company’s Paris demo, the responses appear to have been error-free.[1]

Bard already integrates with other Google services and apps, which lets users prompt actions from Bard and export responses to those apps (e.g., Docs). Similar to Microsoft, Google is trying to weave its suite of services together around a common knowledge graph facilitated by generative AI.

Importantly, Google also announced a number of third-party plug-ins that Bard will be able to interact with directly, so users can prompt those plug-ins to do things from within Bard.

Bard will be able to tap into all kinds of services across the web with extensions from incredible partners like Instacart, Indeed, Khan Academy, and many more.

For example, users can prompt Adobe Firefly to generate images.

It’s kind of like giving every human the ability to call APIs directly from Bard and stitch together their own personal workflows. With plug-ins and integration with Google apps, Google is positioning Bard as a super-powered “personal assistant” for users on the web. The strategy is similar to ChatGPT’s plug-ins with one key difference: Google is enabling users to interact with Bard directly through Search. That integration matters because the “personal assistant” ties directly into how Google is incorporating generative AI into search.

What’s Next for Search

One of the most awaited discussions was around Search. How was Google going to incorporate generative AI into search without hurting core search profits? There are two components to this question:

What will be the revenue impact?

What’s the cost of incorporating that type of search?

Revenue Implications

In its demo, Google provides a few examples to showcase how it plans to incorporate Generative AI into search.

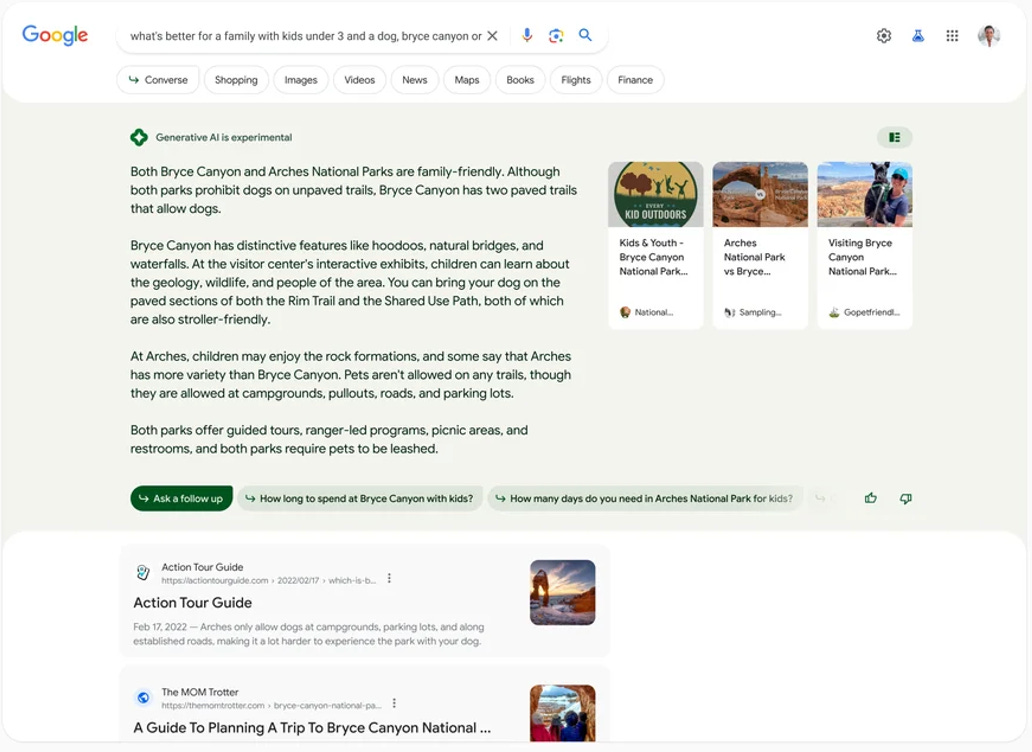

In the first example, the user searches for an answer to the question, “What’s better for a family with kids under 3 and a dog, Bryce Canyon or Arches?” The new search results include both a response created by generative AI and the traditional search results.

The Generative AI response takes up nearly ½ of the initial page:

Space in search results (i.e., ad slots) is incredibly valuable digital real estate. At first glance, the implementation looks expensive, since the company is ceding roughly half of that real estate to generative AI. However, if you run the same query in Google today…

…you will notice a few things: (1) the top result is the same, (2) ¼ of the page is already being used for Q&A recommendations (“People also ask”), and (3) most importantly, there are no ads. This type of query falls into the category of non-monetized Google queries.

Per Google, the majority of search queries fall into this category:

Nearly all of the ads you see are on searches with commercial intent, such as searches for “sneakers,” “t-shirt” or “plumber.” We’ve long said that we don’t show ads--or make money--on the vast majority of searches. In fact, on average over the past four years, 80 percent of searches on Google haven’t had any ads at the top of search results. Further, only a small fraction of searches--less than 5 percent--currently have four top text ads.

So the net revenue impact on this set of queries is likely nil, as there are no ads to cannibalize.

The second example Google demoed falls into the “commercial intent” category. In this example, the user searches for “good bike for 5 mile commute with hills”. The results include a generative AI response but, importantly, preserve the “four top” ad slots by placing them above the generative AI responses.

Below the sponsored results is an AI-generated summary that users can use to research further and view more results. From a display perspective, the AI-generated results take up no more digital real estate than Google’s current “featured snippets.” The generative AI response goes a step further, though, because it draws on multiple web pages instead of a single source.

So really, the implementation of “Chat into Search” does not appear to be as negative as people had feared (or at least the stock price suggested). Not much ad space is cannibalized, and the personal assistant opportunity provides Google with some additional optionality.

All of the noise Microsoft made around Search will likely turn out to be for naught. As I’ve written about before, I think we’ll look back at Satya’s infamous “make them dance” as simply a way to grab developer and user attention to say that Microsoft, too, is an AI company.

Microsoft’s seemingly hell-bent focus on taking share from Google Search will likely turn out to be nothing more than a head fake.

For one thing, Microsoft knows its problem in search is rooted in distribution, not product quality or features. And Microsoft, of all companies, should know that competing with an incumbent that has a massive distribution advantage is unlikely to bear fruit.

Since Microsoft’s declaration of war on Google Search…

…Bing’s market share actually went down.

…Samsung was reported to be considering replacing Google with Bing as its default search provider, which made headlines for Microsoft. Samsung abandoned those discussions last week.

But the biggest indictment of Microsoft’s lack of real focus on search is that you can still only use Bing AI in Microsoft Edge. For most Chrome and Safari users, switching means giving up their browser extensions, saved passwords, payment information, bookmarks, and so on.[2] Google, on the other hand, has made Bard available across all browsers. In many ways, this episode demonstrated the strength of Google’s moat around search. Yes, there’s a technology component to it, but the reach, distribution, and ecosystem around Search amount to an insurmountable lead.

Furthermore, enabling the chat interface in Google search may actually create ways for Google to better monetize the previously unmonetized “non-commercial” queries.

Google’s AI-powered search results have four components:

The AI-generated responses.

Ability to ask “follow-ups,” which is powered by Bard.

Ability to view the citations that generated the AI response.

Traditional search results.

The “follow-up” interface takes users to a full-page view of Bard.

The natural evolution of Bard seems to be turning it into a “personal assistant” that combines Search with plug-ins and generative AI.

With a personal assistant flow, Google could suggest follow-ups to queries that do not immediately have “commercial intent.” For a user researching travel plans to Bryce Canyon, the natural next step would be to book flights or hotels around Bryce Canyon. Sundar alluded to this on the most recent earnings call:

The main area maybe I'm excited by is we do know from experience that users come back to Search. They follow on. They're engaging back on stuff they already did. And so for us, to use LLMs in a way we can serve those use cases better, I think it's a real opportunity.

For example, Bard’s plug-ins with TripAdvisor and Kayak will likely allow users to do some component of that within Bard. And maybe Google will enable direct flight bookings via Bard in the future? Or the ability to buy directly within Bard?

Turning Bard into a personal assistant presents a new way for Google to extend Search. Bard, by virtue of being native to Search, will also have billions of end users, and a large portion of those users will have their payment and personal info linked to their Google profiles. All of this gives developers an incentive to build and invest in plug-ins for Bard.

How exactly Bard will be monetized is speculation at this point. Maybe it's simply API fees, take rates on payments facilitated via Bard, or “sponsored” follow-ups.

But once rolled out, Bard will have a number of advantages over ChatGPT. For one, Bard will be subsidized by Google’s ad model, and “premium” features will be free. ChatGPT does not yet have that luxury and charges $20 per user per month to use “premium” features. As a result of that and Google’s already massive reach, Bard will have significantly more users touching the product, which means it's more attractive for developers.

No doubt, there will be more competition to become the AI personal assistant, with Siri and Alexa waiting in the background. It may be that each assistant owns a certain channel (e.g., Siri for iOS), but there are going to be tensions. It will be interesting to see if and how Apple cedes default Search to Google in a world where a percentage of the responses will be “Bard” results.

Net-net, Google’s approach appears to preserve its ability to monetize the key “commercial intent” queries while, through Bard, increasing its optionality to monetize the other ~80% of queries it does not monetize today.

Cost Implications of Incorporating Gen AI in Search

On the cost side, investors were concerned that incorporating generative AI into search would increase the cost per query. But quantifying that cost is challenging, to say the least. Most attempts to do so have assumed a fixed cost per query and applied it to some assumed “uptake” of LLM-based queries.

In practice, it will not be a linear relationship for a few reasons:

(1) The majority of search queries are simply not phrased in a way that requires a generative AI response. You could probably proxy for that by checking what percentage of the top 1,000 searches show suggested questions.

(2) The cost per query is decreasing as the models improve.

(3) Most analyses ignore the impact of caching. When someone searches “YouTube” on Google (one of the most popular queries), Google does not re-run that search query; it shows cached results, which reduces latency and compute costs. Google’s historical cache hit rate is between 30% and 60%. Google does not provide much public data on its search queries, but an analysis of AOL’s search queries found that caching the top 1M queries captures ~60% of all queries.
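A minimal sketch of why the relationship is not linear. It contrasts the flat “fixed cost × uptake” estimate with one that discounts for the three factors above. Every number and field name is a hypothetical placeholder, not a Google figure; the point is only the shape of the calculation.

```python
from dataclasses import dataclass


@dataclass
class CostAssumptions:
    """All values are illustrative placeholders, not Google disclosures."""
    queries_per_day: float      # total search queries per day
    llm_cost_per_query: float   # $ per uncached generative AI answer
    genai_share: float          # (1) fraction of queries phrased so they warrant a gen AI answer
    cost_decline: float         # (2) fractional drop in per-query cost from model improvements
    cache_hit_rate: float       # (3) fraction of gen AI queries served from cache


def naive_daily_cost(a: CostAssumptions, uptake: float) -> float:
    """The typical estimate: a fixed cost per query times an assumed LLM 'uptake'."""
    return a.queries_per_day * uptake * a.llm_cost_per_query


def adjusted_daily_cost(a: CostAssumptions) -> float:
    """Only uncached gen AI queries reach the model, and each one gets cheaper over time."""
    uncached_genai_queries = a.queries_per_day * a.genai_share * (1 - a.cache_hit_rate)
    return uncached_genai_queries * a.llm_cost_per_query * (1 - a.cost_decline)


if __name__ == "__main__":
    base = CostAssumptions(
        queries_per_day=1_000_000, llm_cost_per_query=0.01,
        genai_share=0.5, cost_decline=0.0, cache_hit_rate=0.3,
    )
    print(f"naive (100% uptake): ${naive_daily_cost(base, uptake=1.0):,.0f}")
    print(f"adjusted:            ${adjusted_daily_cost(base):,.0f}")
```

With these placeholder inputs, the naive estimate comes to $10,000 per day and the adjusted one to $3,500, before any credit for cheaper models. Each factor multiplies the others, so modest assumptions on all three compound quickly.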

Additionally, based on the demo, Google seems to be using a two-step retrieval process to incorporate generative AI into Search. When someone searches a question, Google first finds the top search results; the LLM then analyzes those results and provides an answer based on them, with references. The goal of this “grounding” approach is to:

…avoid incorrect answers by grounding the response in a handful of sources. LLMs are known to hallucinate and give incorrect answers to questions.

…provide timely information. The data that LLMs are trained on quickly becomes stale. For example, ChatGPT only has information up until Sep. 2021. This two-step approach allows Bard to use the LLMs in conjunction with more timely information.

…prevent two different people from getting two different answers to “What’s better for a family with kids under 3 and a dog, Bryce Canyon or Arches?”.
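The two-step flow described above (retrieve first, then generate from what was retrieved) is commonly called retrieval-augmented generation. Below is a toy sketch under stated assumptions: a keyword-overlap ranker stands in for Google’s search ranking, and `generate` is a hypothetical placeholder for whatever LLM call Google actually makes. It illustrates where grounding and citations come from, not how Google implements them.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: dict[str, str], k: int = 3) -> list[tuple[str, str]]:
    """Step 1: rank pages by how many query terms they share (stand-in for real ranking)."""
    terms = tokenize(query)
    scored = []
    for url, text in corpus.items():
        overlap = len(terms & tokenize(text))
        if overlap:
            scored.append((overlap, url, text))
    # Most overlap first; ties broken by URL so a given query always yields the same sources.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(url, text) for _, url, text in scored[:k]]


def build_grounded_prompt(query: str, sources: list[tuple[str, str]]) -> str:
    """Step 2 input: tell the model to answer only from the numbered sources and cite them."""
    lines = ["Answer using only the sources below and cite them as [n]."]
    for i, (url, text) in enumerate(sources, start=1):
        lines.append(f"[{i}] {url}: {text}")
    lines.append(f"Question: {query}")
    return "\n".join(lines)


def grounded_answer(
    query: str, corpus: dict[str, str], generate: Callable[[str], str], k: int = 3
) -> tuple[str, list[str]]:
    """Retrieve, then generate; return the answer plus the URLs shown as citations."""
    sources = retrieve(query, corpus, k)
    answer = generate(build_grounded_prompt(query, sources))
    return answer, [url for url, _ in sources]
```

Because the model only sees the retrieved pages, fresh pages can change the answer without retraining, and the returned URLs double as the citations shown to the user. The deterministic tie-break is a small nod to the consistency goal: identical queries retrieve identical sources.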

Google has not (yet) disclosed whether it caches queries, but it seems likely, and Bard seems to agree. Here’s Bard’s response when I asked, “Do you cache queries?”

Yes, Bard caches queries. This means that when you ask me a question, I will first check to see if I have already answered a similar question. If I have, I will return the cached answer. This can help to improve the speed and accuracy of my responses.

The cache is stored in memory, so it is only available for a limited time. If you ask me a question that I have not answered before, or if the cache has expired, I will need to query the underlying data sources to get an answer.

Caching queries can be a helpful way to improve the performance of a large language model like Bard. It can help to reduce the amount of time it takes to respond to queries, and it can also help to improve the accuracy of responses by reducing the number of times I need to query the underlying data sources.

In contrast, here’s ChatGPT’s response:

As an AI language model, I don't have direct access to user information or the ability to cache queries. I don't have memory of past interactions or queries unless they are provided to me within the context of the current conversation. Each user interaction is treated as a separate and independent session. Consequently, I can't recall or remember any information from previous sessions or queries. My purpose is to provide information and assist with inquiries to the best of my abilities based on the input I receive in the current conversation.

It’s only logical. LLMs are probabilistic and can produce different results each time around. But you do not want to present different users with different responses to the same search query, and you definitely do not want to give the same user a different response to a previously asked question, which means some form of caching will be done. Even the low end of the 30-60% cache hit rate range would meaningfully reduce the cost estimates that have been floating around.
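A minimal sketch of the kind of caching argued for here, assuming a simple exact-match cache keyed on a normalized query string. Google has not disclosed how it caches generative answers, so `AnswerCache` and the `generate` callable are hypothetical. The model runs only on a miss, every repeat gets the identical stored answer, and each hit is an LLM call avoided, which is why the hit rate feeds directly into cost.

```python
from typing import Callable


class AnswerCache:
    """Serve every repeat of a query the same generated answer; only misses invoke the model."""

    def __init__(self, generate: Callable[[str], str]):
        self._generate = generate          # hypothetical: any function mapping a query to an answer
        self._answers: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(query: str) -> str:
        # Treat case and whitespace variants of the same query as one cache entry.
        return " ".join(query.lower().split())

    def answer(self, query: str) -> str:
        key = self._key(query)
        if key in self._answers:
            self.hits += 1
        else:
            self.misses += 1
            self._answers[key] = self._generate(query)  # the only place the model is called
        return self._answers[key]

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Even if the underlying model would word its answer differently on a second run, a repeated query here always returns the first stored answer. A production cache would also need eviction and expiry (Bard’s own answer above mentions time-limited, in-memory storage) and a looser notion of a “similar” query than exact string match; both are left out to keep the sketch short.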

Net-net, the negative revenue and cost impact that investors had feared has largely dissipated. The recent stock price move partly reflects that, but if anything, Google has more optionality around AI than anyone thought or expected.

If you’re finding this newsletter interesting, share it with a friend, and consider subscribing if you haven’t already.

Always feel free to drop me a line at ardacapital01@gmail.com if there’s anything you’d like to share or have questions about. This is not investment advice, so do your own due diligence.

[1] This demo, unlike the one in Paris, was not live, so the company probably vetted it heavily.

[2] You can export to other browsers, but in my experience it is very janky.
